Turn Predictive Insight into Competitive Advantage

Achieving Business Value with AI

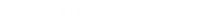

RapidMiner Studio

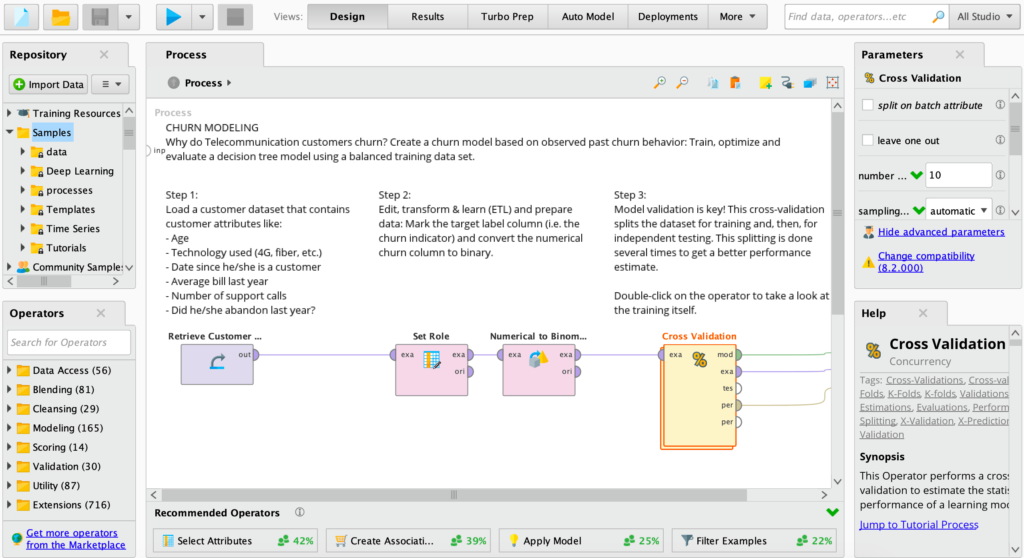

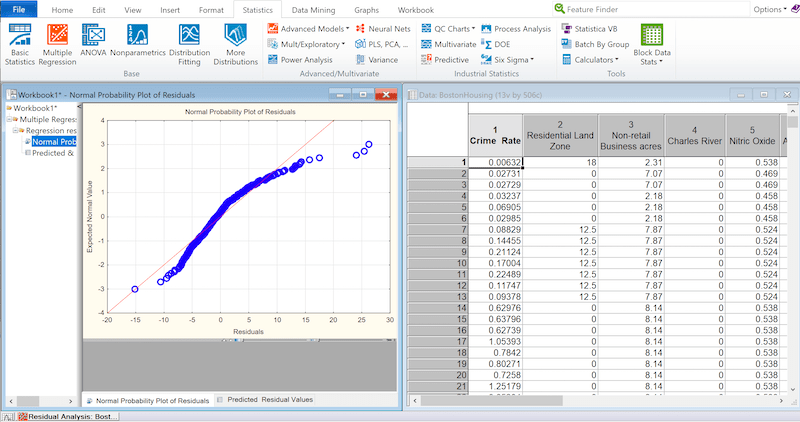

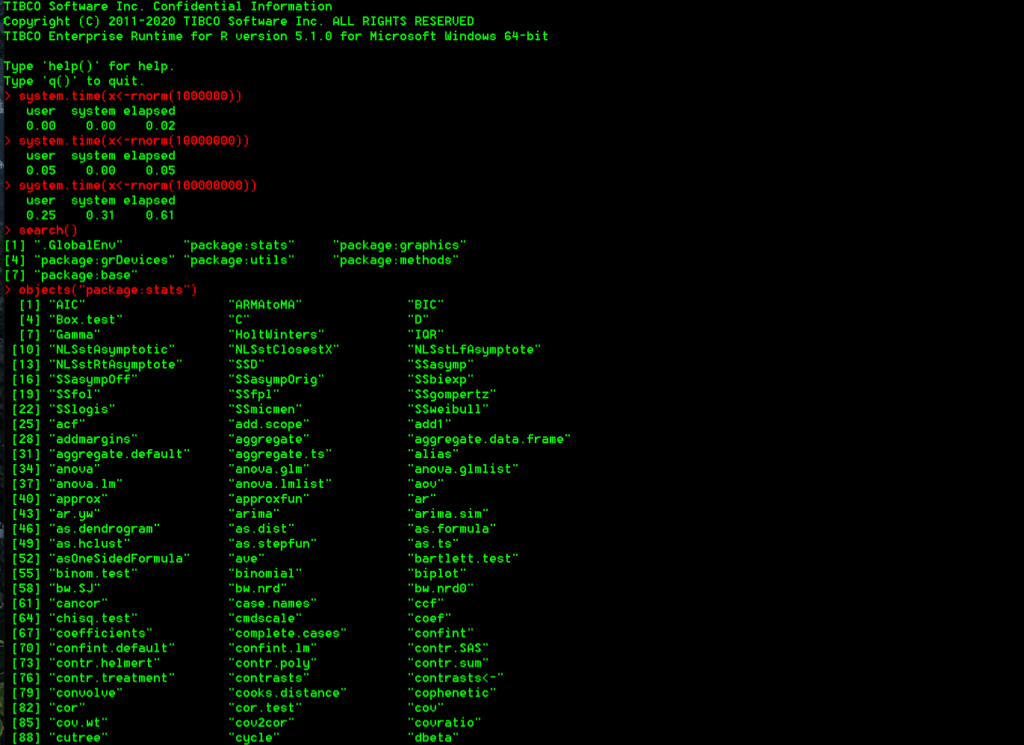

S+

Point/click access to advanced graphics and cutting-edge statistics, without coding in R.

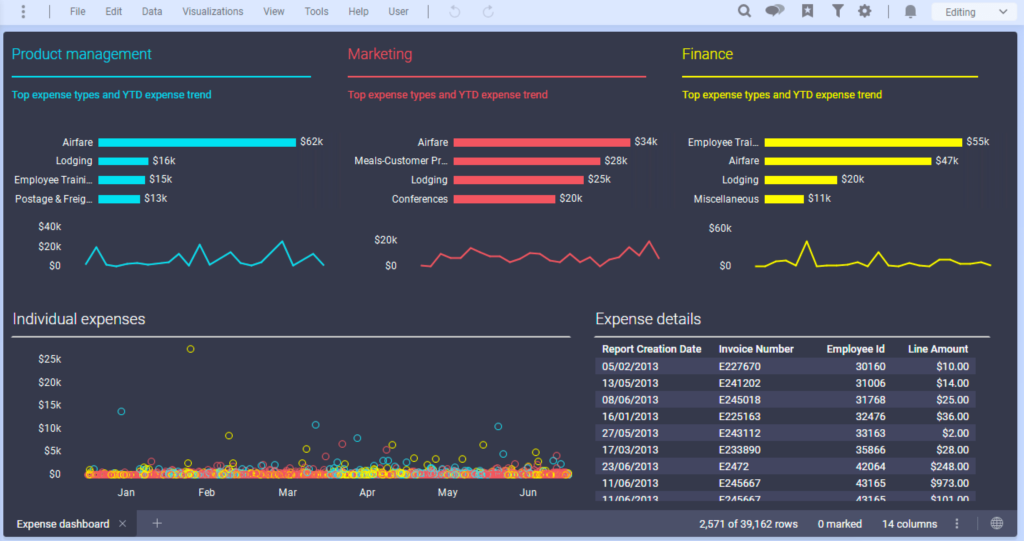

Spotfire

The only point/click data visualisation and predictive analytics dashboard that contains a built-in data science engine, recognised as a data prep leader in the Forrester Wave.

Let’s create a plan for your specific needs

Let’s Talk Data Science

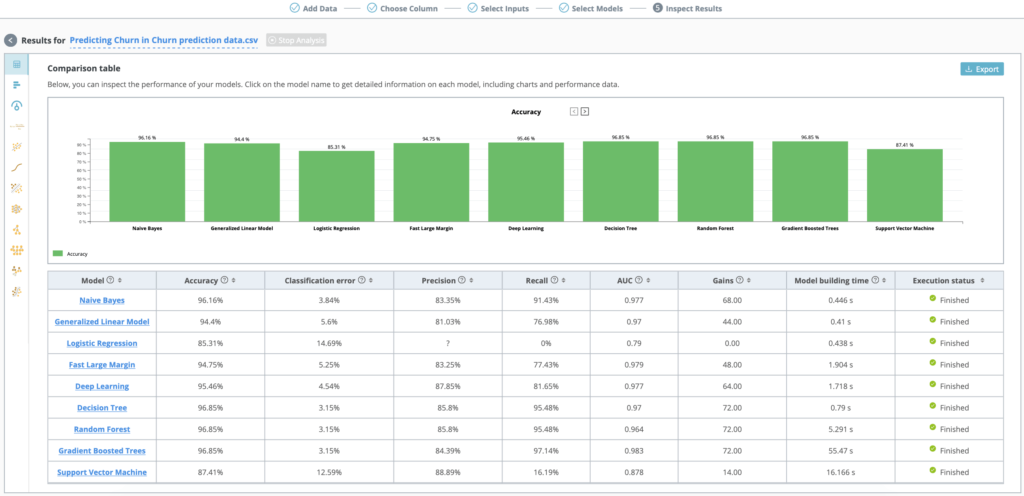

RapidMiner Go

Automated and guided machine learning web interface. Point/click data science for domain experts, business users and analysts.

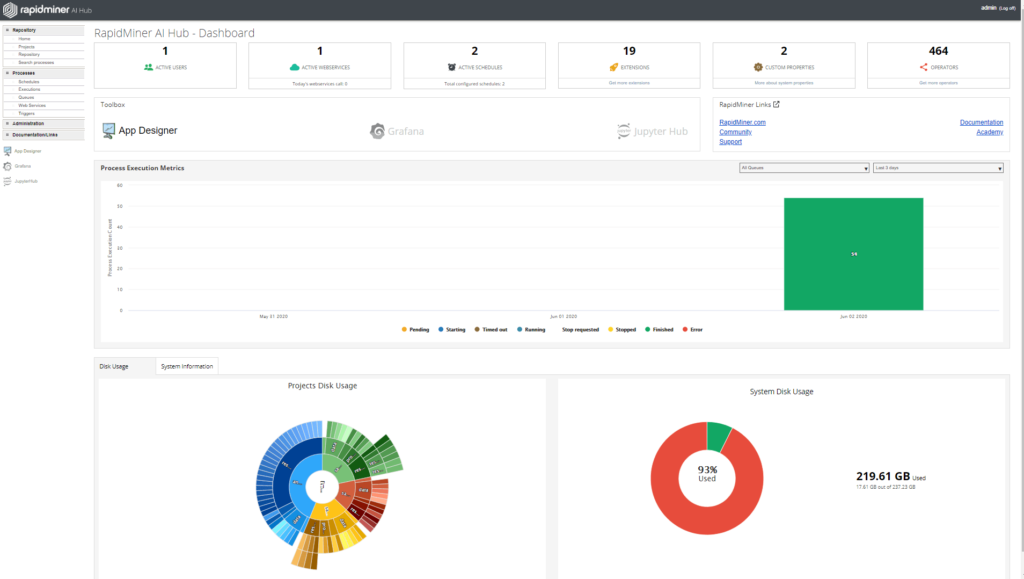

RapidMiner AI Hub

Automate processes and R and Python code, share and re-use predictive models, then deploy to production.

TIBCO Enterprise R

High-performance, enterprise-quality statistical engine. Brings speed, reliability and support to open-source R code.

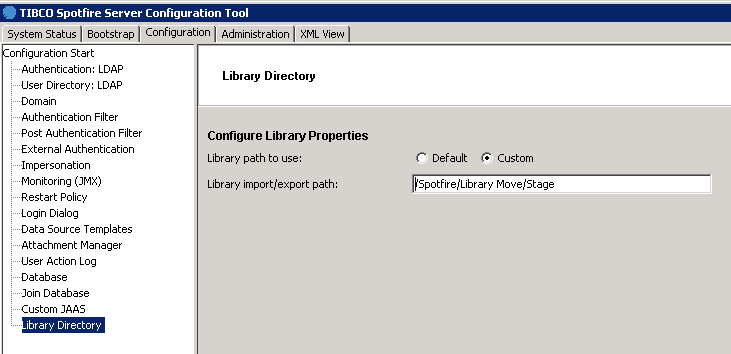

Spotfire Server/Analyst

Share Spotfire Analyst visualisations and predictive analytics dashboards throughout the enterprise.